A tale, told by a fool, full of sound and fury, signifying safe data ...

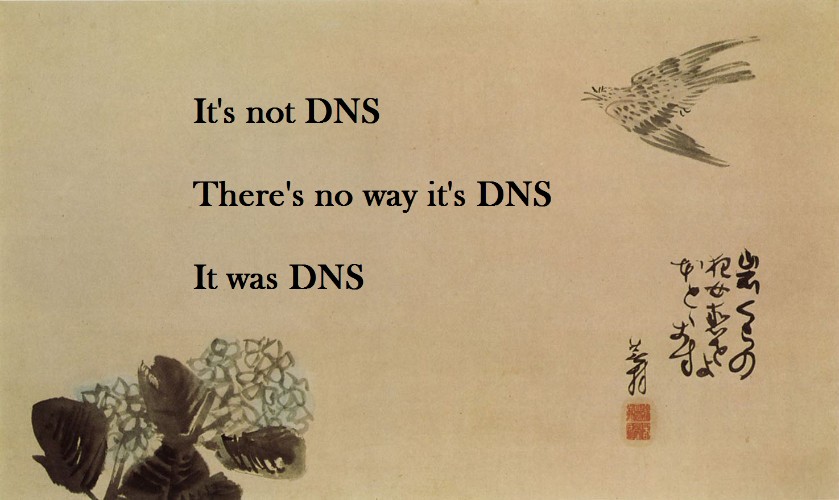

About two weeks ago, I noticed that my DNS was slow, and this didn't make sense to me. DNS for me runs on two VMs on two different machines. If one is down, the other (easily) handles the load.

It just couldn't have been DNS that was the problem.

Well, more precisely, it was one of the two DNS servers. It was sluggish, basically non-responsive.

That also didn't make sense, as it is running on a 128 GB RAM AMD Epyc unit, with a zfs RAID10 equivalent (stripe across mirrored pairs) providing the backing store for the VMs.

I noticed that all the VMs were slow or non-responsive. I hadn't received an alert (my bad: I left a few things unconnected, and I'm fixing that in short order).

OK. Start with a node reboot; maybe it's accumulated cruft from being up a while. The reboot took quite a while, as libvirtd couldn't shut down the VMs cleanly.

Once the unit was back up, I thought: let's look at the zpool status output.

Of the 5 drives in my striped mirror, 3 ... 3!!! ... were marked as offline and in need of potential replacement. The hot spare hadn't been substituted in, so I was likely looking at massive, irreparable data loss.
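Why 3 out of 5 looked so grim: in a stripe across mirrored pairs, the pool keeps all of its data only while every mirror still has at least one working disk, and a hot spare holds nothing until it has been swapped in and resilvered. Here is a minimal Python sketch of that rule, assuming the layout from the status output further down (two mirrored pairs plus one spare). The disk labels `a` through `e` and the function names are hypothetical, and the model treats a disk as either fully working or fully dead, ignoring the partial, checksum-level failures that zfs can often work around.

```python
from itertools import combinations

# Hypothetical labels: two mirrored pairs plus one hot spare, matching the
# 5-drive layout described above (the spare holds no data until resilvered in).
MIRRORS = [{"a", "b"}, {"c", "d"}]
SPARES = {"e"}
ALL_DISKS = set().union(*MIRRORS) | SPARES


def pool_survives(failed: set[str]) -> bool:
    """A stripe of mirrors keeps its data if every mirror has a working disk."""
    return all(mirror - failed for mirror in MIRRORS)


def survival_odds(n_failed: int) -> tuple[int, int]:
    """Count (surviving, total) combinations of n_failed dead disks."""
    combos = [set(c) for c in combinations(sorted(ALL_DISKS), n_failed)]
    surviving = sum(pool_survives(c) for c in combos)
    return surviving, len(combos)


if __name__ == "__main__":
    for n in range(1, 4):
        ok, total = survival_odds(n)
        print(f"{n} failed disk(s): pool intact in {ok} of {total} cases")
```

Under these assumptions, any single failure is survivable, 8 of the 10 two-disk combinations are survivable, and only 4 of the 10 three-disk combinations are: the ones where the spare is one of the dead disks and each mirror loses just one member.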

I saw it was trying to recover and resilver, so I thought about how zfs works. It checksums (effectively) everything, which means that if a read or write goes amiss, it will detect it. And attempt to fix it.
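Here is a toy model of what "detect it and attempt to fix it" means on a two-way mirror. Each block's checksum is stored apart from the block itself (zfs keeps it in the parent block pointer), so a read can tell which copy is bad and rewrite it from the good one. This Python sketch is a simplified illustration under those assumptions, not zfs's actual implementation. It uses SHA-256 from `hashlib`, while zfs defaults to fletcher4 and offers sha256 as an option. The `Mirror` class and its methods are hypothetical.

```python
import hashlib


def checksum(data: bytes) -> bytes:
    # zfs defaults to fletcher4; SHA-256 stands in here for simplicity.
    return hashlib.sha256(data).digest()


class Mirror:
    """Toy two-way mirror: each block is written to both disks, and its
    checksum is kept separately (as zfs does in the parent block pointer)."""

    def __init__(self) -> None:
        self.disks: list[dict[int, bytes]] = [{}, {}]
        self.checksums: dict[int, bytes] = {}

    def write(self, block: int, data: bytes) -> None:
        for disk in self.disks:
            disk[block] = data
        self.checksums[block] = checksum(data)

    def read(self, block: int) -> bytes:
        expected = self.checksums[block]
        for copy in self.disks:
            data = copy.get(block)
            if data is not None and checksum(data) == expected:
                # Self-heal: rewrite any copy that is missing or corrupt.
                for other in self.disks:
                    if other.get(block) != data:
                        other[block] = data
                return data
        raise OSError(f"block {block}: no copy matches its checksum")
```

If one copy is corrupted, `read` returns the good copy and quietly repairs the bad one. If both copies fail verification, nothing good is left to copy from. That is roughly the toy-model analogue of a "Permanent errors" entry in zpool status.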

So why wasn't it fixing it?

I looked at the unit and realized that I had bought the SAS card the array was running on in 2017. There had also been big thunderstorms a day or two before I noticed the slowdown.

Could it be that the thunderstorms fried the card, or motherboard, or disks, or cables?

Possible. The simple diagnostic method: replace the most obvious suspect first and observe any change. So I got a replacement SAS card today, an LSI ... er ... Broadcom 9305-16i. After swapping out the old card (a 9300-8i) for this one and booting the unit, I was rewarded with the following view:

```
zpool status -v
  pool: storage
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Sat May 17 19:28:22 2025
        10.7T / 12.8T scanned at 19.1G/s, 5.27M / 2.12T issued at 9.38K/s
        5.27M resilvered, 0.00% done, no estimated completion time
config:

        NAME                                   STATE     READ WRITE CKSUM
        storage                                ONLINE       0     0     0
          mirror-0                             ONLINE       0     0     0
            ata-ST14000NM001G-2KJ103_ZL2H871A  ONLINE       0     0     0
            ata-ST14000NM001G-2KJ103_ZL2HBL8G  ONLINE       0     0     2  (resilvering)
          mirror-1                             ONLINE       0     0     0
            ata-ST14000NM001G-2KJ103_ZL2HCAQ7  ONLINE       0     0     0
            ata-ST14000NM001G-2KJ103_ZL2HCP50  ONLINE       0     0     0
        spares
          ata-ST14000NM001G-2KJ103_ZL2HQJ4W    AVAIL

errors: Permanent errors have been detected in the following files:

        <metadata>:<0x0>
        /storage/data/machines/nyble/data/platform-image/platform_build/vmlinuz-4.15.0-1034-oem
        /storage/data/machines/nyble/data/platform-image/scripts/lsbr.pl
        /storage/data/machines/nyble/data/platform-image/scripts/spark
        /storage/data/machines/nyble/data/platform-image/usb/bootoptions
        /storage/data/machines/nyble/data/platform-image/usb/mbr.bin
        /storage/data/machines/nyble/data/tiburon/tb.tar.bz2
        /storage/data/machines/nyble/data/platform-image/platform_build/initramfs-ramboot-4.15.0-1034-oem
```

Happily, the permanent errors are in files I should have cleaned up ages ago. Checking again, the resilvering is now complete.

About 7 years ago, I decided to go with zfs for large storage on this machine. I was convinced to do so after a massive data loss on xfs (the only one I've ever had) on a large software RAID6. I was able to recover 75% or so of my data, but lost some things I didn't want to lose. I figured zfs would likely provide better protection.

It does, and it did.

I've increased entropy in the universe (losing data irretrievably), but not anxiety. I'll call that a win!
