In undergrad (Stony Brook), I had a math teacher (numerical methods class) who talked about this package he used in his research. Macsyma was a symbolic math package, sometimes called a computer algebra tool.

Of course, seeing the likely utility of this in some of my courses (I am still kicking myself for not completing 2 more math classes for a minor in math), I asked if I could get access to it. He told me, in no uncertain terms, that it was a bad idea, as I needed to develop the understanding and skills to work without such a tool.

20-year-old me was disappointed, and resigned to doing things without this modern marvel. Long hand. I was, at the time, still developing my skills. He (Eric) was right. You need insight, and enough experience working with specific systems, to have intuition as to whether the answer a tool like Macsyma reports is correct.

A few years later, in graduate school (Physics), Macsyma was installed on the department's DEC Vax 8650. I used it a bit in my Statistical Mechanics class (Stat Mech, or as we sometimes called it, S&M ... I was 23-24, such was the state of my humor in those days). We were studying Ising and Potts models (very similar math to various bits of machine learning), and playing with Hamiltonians with varying interaction models and geometries. Part of the class was simulation of our results, and I had some ideas to test about short-cut approximations to various measurable (expectation) values. So I played with Macsyma. And I used the fortran(%) directive once I had the form I needed.

Yes, I wrote lots of Fortran back then. Fortran 77 to be more precise. It was a tool, and I used it. Most of the code I worked with was written by others in Fortran, and I worked on extending it, or writing my own. In Fortran.

It's a tool. Like Macsyma. It should help you achieve a goal.

Later in grad school, my advisor bought a license for Maple, and I used that for some of the hairier manipulations I needed. We didn't have Macsyma available. I did have an ancient copy of MuMath around, but found programming that to be ... a royal pain. I felt nostalgic for MuMath a while ago, and looked online. It seems to have gone away around the late 1990s.

The high energy physics (HEP) community had developed a number of CAT (computer algebra tool) packages to help them manipulate their Lagrangians. Their tooling was designed for a specific class of systems, and was not generally usable for what I was doing in grad school. I liked Maple, but it had its own limitations.

In the early 1990s, Mathematica was relatively new, and we had Maple, so I never played with it. I did look over its syntax, and had some cringe as a result. Then again, when I joined my thesis advisor's group in 1990, I used MathCad to perform what amounted to a "by hand" parameter optimization (loss function minimization in the parlance of today). My advisor had coded this up herself, and was using this as a tool to do a parameter search to match semiconductor band shapes/features to experimental observations.

It would have been a better use of time/effort to rewrite all of this (in Fortran). Probably a week of dev, a week of sanity checking, and then a series of runs. I'm sure the data would have made more sense. As it turned out, we were dealing with a very stiff set of functions, and the band structure wound up very flat at the top of the valence band. This meant that the electrons populating those levels were very "massive", in the sense that it would require significant energy to change their momentum.
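The link between a flat band and a "massive" carrier is the standard effective mass relation, m* = ħ² / |d²E/dk²|: the smaller the curvature of the band, the larger the mass. Here is a minimal sketch in Python, assuming a made-up parabolic band near the valence band maximum and units where ħ = 1. The function names and numbers are purely illustrative; this is not the band structure from that work.

```python
def effective_mass(band, k0, dk=1e-3, hbar=1.0):
    """Estimate |m*| = hbar^2 / |d2E/dk2| at k0 using a central difference."""
    curvature = (band(k0 + dk) - 2.0 * band(k0) + band(k0 - dk)) / dk**2
    return hbar**2 / abs(curvature)


def make_parabolic_band(mass):
    """Hypothetical valence band top: E(k) = -k^2 / (2 * mass), with hbar = 1."""
    return lambda k: -k**2 / (2.0 * mass)


# Two illustrative bands: one strongly curved, one nearly flat.
curved_band = make_parabolic_band(0.5)
flat_band = make_parabolic_band(50.0)

m_curved = effective_mass(curved_band, 0.0)
m_flat = effective_mass(flat_band, 0.0)
print(m_curved, m_flat)
```

The flatter band gives the larger effective mass, which is the sense of "massive" above.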

I remember thinking this was wrong, and I asked my advisor about this. I don't recall the specific reply, but I do recall the general sentiment was "don't worry about this so much, it was more about training me on the tooling".

Later, while working, I needed estimates of the error associated with numerical forward/reverse transforms, specifically radial Fourier transforms. I was trying to provide error bounds for this computation. I tried this in Maple, and wasn't able to get it to give me a closed form solution.

I wound up coding it up (in Fortran), and plotted the data using Gnuplot. I selected my error bounds, and that dictated the minimum number of points for the transform. My thesis has an appendix on this computation and the associated integral I had to do. I've always enjoyed working on integrals, and I have to say what helped here was my 4th edition of Gradshteyn and Ryzhik (since given to my daughter as she pursues a Ph.D. in Applied Math). One of the transforms I used ended up in a form I could find in its tables of integrals.
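To give a flavor of "pick an error bound, then find how many points you need", here is a rough sketch in Python rather than the original Fortran. It is not my thesis code. It assumes a Gaussian test function, whose 3D radial transform is known in closed form, a plain trapezoid rule, and a simple doubling search. All function names, the cutoff `r_max`, and the tolerance are illustrative assumptions.

```python
import math


def radial_transform(f, k, r_max, n_points):
    """Trapezoid estimate of F(k) = 4*pi * int_0^r_max f(r) sin(kr)/(kr) r^2 dr."""
    h = r_max / n_points
    total = 0.0
    for i in range(n_points + 1):
        r = i * h
        # sin(kr)/(kr) -> 1 as kr -> 0, so handle that point explicitly.
        kernel = 1.0 if k * r == 0.0 else math.sin(k * r) / (k * r)
        weight = 0.5 if i in (0, n_points) else 1.0
        total += weight * f(r) * kernel * r * r
    return 4.0 * math.pi * h * total


def gaussian(r):
    """Test function (an assumption for this sketch): f(r) = exp(-r^2)."""
    return math.exp(-r * r)


def gaussian_exact(k):
    """Analytic 3D transform of exp(-r^2): pi^(3/2) * exp(-k^2 / 4)."""
    return math.pi ** 1.5 * math.exp(-k * k / 4.0)


def min_points(k, tol, r_max=10.0, n_start=2, n_limit=4096):
    """Double the point count until the absolute error drops below tol."""
    n = n_start
    while n <= n_limit:
        err = abs(radial_transform(gaussian, k, r_max, n) - gaussian_exact(k))
        if err < tol:
            return n, err
        n *= 2
    raise RuntimeError("tolerance not reached within n_limit points")


n_needed, err = min_points(k=1.0, tol=1e-6)
print(n_needed, err)
```

Because the search doubles the point count, it returns a power-of-two upper bound rather than the true minimum. A finer search between the last two counts would tighten it.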

Again, no Macsyma, no CAT helped here. I had wanted them to, but I understood the math and physics a bit better than the tooling, so I had a better idea of what I wanted. I didn't blindly take computer tool output as the word of the almighty math deities. One should generally never do that. Witness ChatGPT and the furor around it: one can get it to change answers (from right to wrong, or argue for things that are wrong, or ...).

Computers and software are only as good as their code and their inputs/training. They do not "think", and do not have "intelligence" the way we (humans) understand it.

In the late 1990s, Maxima, a descendant of the DOE version of Macsyma, was released as open source. By then I wasn't doing much theory/math anymore.

In 2020, 55-year-old me started (re)playing with my old research tools. My Fortran code just worked. 30+ year old code. It could even read the big endian data I had from my workstation days. The code ran. Fast. I used to have to wait a week for 100 timesteps of my molecular dynamics code (1992). Now, on my laptop (2020), 100 seconds.

I didn't have Maple. I have (or had) many 5.25 inch floppies with my code on them. And many of the smaller floppies. I don't have a working 5.25 inch floppy drive. I've got 3 in the basement, but they don't work. Luckily, I moved most of my code and research bits to the 3.5 inch floppies. I have a USB reader for those.

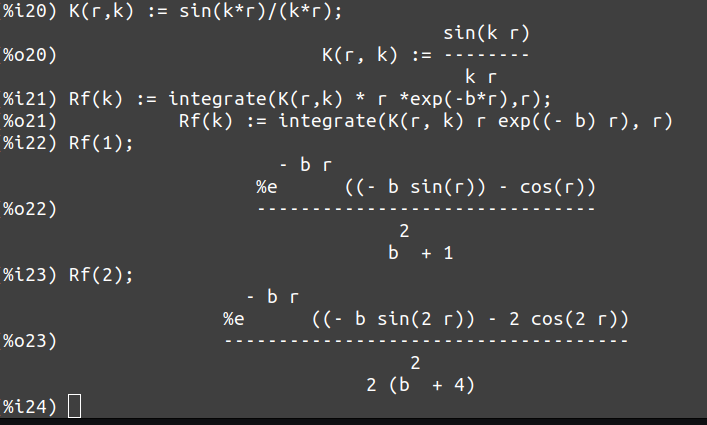

I wanted to play more with these tools. So I added Maxima into my analytics tool base. I spent some time playing with its integral capability.

It's a very powerful tool.

This is semi-relevant, as I follow Integrals Bot on Twitter. I enjoy looking at each integral, and seeing if I can see a path to the solution. For some of the integrals it posts, you can see the approach quickly. And sometimes ... usually with inverse trig functions, they are hard.

I'm in good company on this, as @nntaleb occasionally posts his solutions. After seeing these, the now 57-year-old me wonders how much math I've lost from not using it daily. I play with some of the integrals during down moments, with Maxima.

So, I've been spending some time brushing up on this tooling. And relearning things that I've learned before, that have not aged well in my brain.

It's a tool, but one that you need expertise to use. I don't blindly trust the output. I use it to spur additional thought.

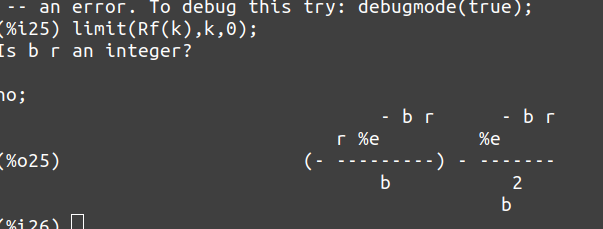

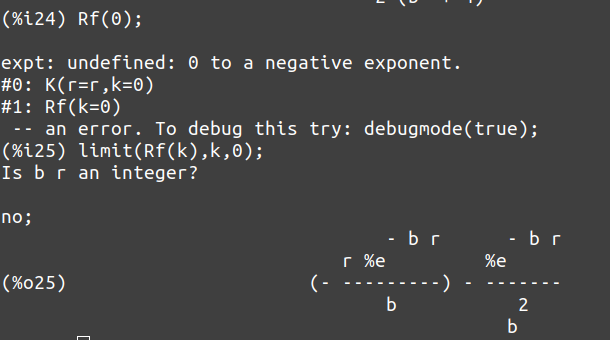

Because, as I showed previously ...

If you don't know the math and simply ask for the value of Rf(k=0), the tool reports an error. This is due to the factor of 1/k, which is undefined at k=0. But the limit as k goes to 0 is defined.
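The same trap shows up in any numerical code. Here is a small Python sketch, assuming the 1/k factor multiplies a sin(kr) term, as it does in a radial Fourier transform. The exact form of Rf is not reproduced here, and the function names are made up for illustration. Naive evaluation at k = 0 fails, while using the known limit (sin(kr)/k goes to r) works.

```python
import math


def kernel_naive(k, r):
    """Direct evaluation of sin(kr)/k; fails at k = 0."""
    return math.sin(k * r) / k


def kernel_safe(k, r, eps=1e-8):
    """Same quantity, but uses the k -> 0 limit (which equals r) near k = 0."""
    if abs(k) < eps:
        return r
    return math.sin(k * r) / k


r = 2.0
try:
    kernel_naive(0.0, r)
    naive_failed = False
except ZeroDivisionError:
    # The tool (here, Python) does exactly what was asked, and errors out.
    naive_failed = True

print(naive_failed, kernel_safe(0.0, r), kernel_safe(1e-4, r))
```

The tool does not know that you meant the limit. You have to know to ask for it.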

All tools (search, ChatGPT, DALL-E, etc.) can happily generate insane output. You need to be smart, and not blindly trust the output.