HPC in the first decade of a new millennium: a perspective, part 1

By joe

[Update: 9-Jan-2010] Link fixed, thanks Shehjar!

This is sort of another itch I need to scratch. Please bear with me. This is a long read, so I am breaking it up into multiple posts, and you won’t have to read it as one huge novel.

Many excellent blogs and news sites are giving their perspectives on 2009. Magazine sites are talking about the year’s hits in HPC computing, storage, and networking. You can’t ignore the year itself, and I won’t. Doug Eadline’s piece is (as always) worth a careful read.

I want to look at a bigger picture than the last year, though: the last decade. My reasoning? A famous quote by George Santayana: “Those who cannot remember the past are condemned to repeat it.” Ok, we aren’t doomed to repeat our past, but something very remarkable is going on in the market now. Something that has happened before, under similar circumstances. Let me explain.

In the beginning …

At the close of 1999, many things were happening in the HPC market: things that would quickly reshape the organization of this market, how people made money in it, how systems were designed, and how code was written and run. Ask an SGIer back then (well, most of them; a few others and I were exceptions at that time) whether commodity machines would ever replace the distributed shared memory model for HPC … and the answer you would hear was an unequivocal, resounding … even scornful … no. Ask the same question of an IBMer, an HP-ite, a Sun person, or a DEC person, and you’d get largely the same answer.

HPC as a market was finishing its transition away from vector architectures. That is a story in and of itself, as vector machines dominated … no … ruled … early HPC. The super microprocessors ate their lunch, not on performance, but on cost per flop, and on the number of flops available per unit time per dollar (or other appropriate currency). To wit, in 1999 the HPC processor landscape looked like this (generated from the excellent top500.org site):

![Top500 processor families, November 1999](/images/processor-family-Nov-1999.png)

Indeed, if you use the historical charting capability of the top500 site, you arrive at an inexorable conclusion.

![Top500 processor families over time](/images/processor-family-over-time.png)

That is: architectures come, and architectures go, in processors, in system design, and elsewhere. Moreover, you see consolidation. Taking the slice at 1999, you can see why all these large RISC CPU vendors would scoff at the little commodity machines. It’s not like they were a threat now … were they? They were just cheap “Pee Cees” after all (for added derision, they emphasized the “pee” portion). How could they compete with the mighty SMP in HPC?

![Top500 system architectures over time](/images/system-architecture-over-time.png)

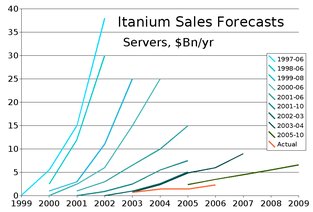

Aside from this, about half of these vendors had just signed on to the good ship Itanium, based in part upon some … er … creative … yeah, that’s the ticket … market penetration data … manufactured … er … estimated by one of the market watchers …

![Itanium sales forecasts](/images/Itanium_Sales_Forecasts_edit.png)

Yeah, in the brand new millennium, we would soon all be using VLIW-based machines, and companies would be selling Billions and Billions of dollars of them. Yeah. Billions. Of. Dollars. Of Itanium.